August Privacy Roundup

Here's our August newsletter covering major events in June and July, including the practical impacts of the EU-US DPF adequacy decision, the raft of cases involving Meta, our awesome new Rights module, and AI in the USA.

You may have noticed we skipped our July newsletter covering June (apologies!), so here’s a bumper summer edition. As always, there’s so much to talk about, with the new Privacy triumvirate of Transfers, Meta and AI in the headlines. And we’ll look at our new Rights management module.

But first, the excellent news on the adequacy decision for the EU-US DPF and the practical benefits for you and your organisation!

Transfers to the USA are back on track!

On 10 July 2023, three years minus 6 days from the Schrems II decision, the European Commission released its adequacy decision for the EU-US Data Privacy Framework. Given the volume of transfers due to use of US cloud providers of all types, this is amazing news for everyone operationalising Privacy.

What is the DPF?

The US government’s website has a good backgrounder and a great FAQs page. In summary, the CJEU in Schrems II decided that Privacy Shield was invalid for two main reasons:

- the breadth of US government surveillance, which wasn’t limited to what’s necessary and proportionate, and

- lack of redress for individuals in the EEA whose data was surveilled.

The DPF fixes those two complaints with an Executive Order that limits what the US federal agencies can ask for, and introduces a court and appeal process for non-US individuals to seek redress.

As the rules and Principles in Privacy Shield weren’t really criticised in Schrems II, they’ve only been lightly updated to become the rules and Principles in the new DPF. There are 7 main Principles, 16 Supplemental Principles and an Annex 1 focussed on the arbitration model.

A US organisation needs to self-certify to the DPF, refer to it in its Privacy Policy, and comply with the rules and Principles.

An interesting wrinkle is that the DPF works on mutuality: the US also has to determine that the other jurisdiction provides protection from government surveillance, and redress, for US residents.

DPF adequacy – EEA

The DPF decision means that EEA organisations can now transfer personal data to organisations that are self-certified to the DPF and appear on the DPF List without needing to do a Transfer Impact Assessment (or TIA) under EU GDPR.

Don’t listen to anyone saying you also need SCCs to transfer from the EEA to the USA, or that it’s still illegal – you do not and it isn’t, in each case as long as your US importer is self-certified to the DPF and named on the List. Like Google.

DPF adequacy – UK

Because the UK GDPR is 99% identical to the EU GDPR, the UK and US have simply created an extension to the DPF (a so-called ‘UK-US Bridge’) to allow for transfers from the UK under UK GDPR. Why reinvent the wheel?

The UK-US Bridge is to the DPF what the UK Addendum is to the EU SCCs: it adopts the EU-US DPF in its entirety and says ‘we’ll have that, but replace the EU with the UK and EDPB with the ICO’.

So the rules and Principles in the UK-US Bridge are the EU-US DPF rules and Principles; you just need to read UK and ICO for EU and EDPB. But UK organisations can’t rely on the UK-US Bridge yet: we need to wait until the UK issues its own adequacy decision for the UK-US Bridge. Brexit benefits …

DPF adequacy – Switzerland

While the UK has the GDPR, albeit the UK GDPR, Switzerland’s law is not the GDPR. So Switzerland has its own Swiss-US DPF. But again, like the UK-US Bridge, it takes the EU-US DPF rules and Principles and makes minimal edits to cater for the fact that Swiss law isn’t the GDPR (for example, in the definition of special categories or sensitive personal data) and to refer to Switzerland and the Swiss regulator rather than the EEA and the EDPB.

Again, Swiss organisations will need to wait for Switzerland to issue its own adequacy decision for the Swiss-US DPF.

DPF impact on SCCs for EU (and later UK) GDPR

An excellent impact of the DPF is that the measures put in place by the Executive Order behind it apply to any transfer of personal data from the EEA to the USA, not just transfers to organisations on the DPF List.

The European Commission itself determined that the limits on government surveillance, and the redress for EEA data subjects, brought in by the Executive Order are sufficient to address the Schrems II concerns in those two areas. So you can take the same view for your own transfers, for example under SCCs.

You’ll still need to comply with all other GDPR requirements, for example on processors, but on the Schrems II concerns about surveillance and redress, you can rely on the adequacy decision’s finding that they have been resolved.

Schrems III?

NOYB have already stated they’ll challenge the DPF as quickly as possible, while the US and EC teams are confident they have sufficiently addressed the CJEU’s two main criticisms. So this will be one to watch. But, as no-one but the EC itself and the CJEU can take down an adequacy decision, for now you can rely on the EU-US DPF for your EEA-US transfers to US entities named on the DPF List, and on the UK and Swiss equivalents for transfers to entities on the relevant List once they arrive. In those cases, you do not need a TIA or SCCs.

Meta, Meta, Meta

We’ve written before about Meta’s record €1.2bn fine from Ireland’s DPC over its EU-US transfers, and about the DPC’s earlier decisions on Meta claiming to use contract as its legal basis for behavioural advertising (often called ‘forced consent’ as there’s no choice).

- We’ve a really interesting breakdown of the €2.5bn that Meta’s been fined by the DPC in the last 3 years alone. That’s a huge figure. Ireland’s Defence budget in 2022 was €1.1bn.

- The fines clearly show that Privacy is not just Security: only 1 of the 8 fines was about Security, and it accounted for only 0.7% of the total by value.

- And, as we’ll see now, Privacy laws operate within a wider legal landscape alongside Competition law, IP law and, in particular, laws on Equality.

Meta then changed to legitimate interests as their legal basis for targeted, behavioural advertising, and this was shot down in the Competition law CJEU case we’ll see below.

As we’ll see, it’s becoming clearer each month that behavioural advertising needs consent; other legal bases won’t do.

Competition Law & GDPR Crossover

The 4 July 2023 CJEU decision against Meta is particularly interesting because this was a Competition law case that involved Data Protection, resulting from a decision by a competition authority in Germany, not a Data Protection law case resulting from a Privacy regulator.

It’s important to bear in mind that this was about Meta collecting ‘off-Facebook’ personal data on Facebook users and combining it with ‘on-Facebook’ personal data (ie: data about that user within Facebook itself) for personalised advertising and more, all based on the general terms of use of the Facebook service.

‘Off-Facebook’ includes collecting personal data on Facebook users when they were using other Meta properties such as Instagram and WhatsApp, and collecting personal data about Facebook users when they visited third party sites and apps, through the use of programming interfaces, cookies and similar technologies.

So this decision wasn’t simply about targeted advertising, it was about collecting off-Facebook data and combining that with on-Facebook data to analyse for personalised advertising.

Interesting points

- Competition authorities can review breaches of GDPR when looking at market abuse, just as they can review breaches of any other law.

- This wasn’t a GDPR enforcement case, so the one-stop shop didn’t apply and Meta US was also a party.

- Use of Facebook SDKs, tracking pixels and the like to behaviourally profile people logging into other sites and services was not in the reasonable expectation of the individual.

- You need to consider the nature of each site or service: data collected in this way about its visitors may be special category data when it comes from one site or service, such as a health clinic, but not from another.

- Necessity for contract is not a valid legal basis for combining off-Facebook data with on-Facebook data to market to individuals; indeed, consent is the only likely basis.

The CJEU made a strong statement in para 117 of the judgment which, in legal jargon, is arguably obiter as it’s not directly about the processing in the case. The CJEU clearly stated that behavioural advertising, at least on social media networks, can only happen with consent:

In this regard, it is important to note that, despite the fact that the services of an online social network such as Facebook are free of charge, the user of that network cannot reasonably expect that the operator of the social network will process that user’s personal data, without his or her consent, for the purposes of personalised advertising. In those circumstances, it must be held that the interests and fundamental rights of such a user override the interest of that operator in such personalised advertising by which it finances its activity, with the result that the processing by that operator for such purposes cannot fall within the scope of [legitimate interests].

And indeed, Meta has announced that it is moving from legitimate interests (having previously relied on contract) to consent.

Meta’s behavioural advertising banned in Norway

In light of the DPC order banning the use of contract, and the CJEU ruling out legitimate interests, for behavioural advertising, Norway’s data protection regulator has ordered a 3-month ban, starting 4 August 2023, on behavioural advertising to Facebook users in Norway until Meta changes its system to consent.

It’s worth quoting the main paragraph at length; we’ve split it up to make it easier to read:

‘… this letter imposes a temporary ban on Meta’s processing of personal data of data subjects in Norway for targeting ads on the basis of observed behaviour (hereinafter “Behavioural Advertising”) for which Meta relies on [contract or legitimate interests]. For the avoidance of doubt, Behavioural Advertising includes targeting ads on the basis of inferences drawn from observed behaviour as well as on the basis of data subjects’ movements, estimated location and how data subjects interact with ads and user-generated content. This definition is in line with our understanding of the scope of the IE Decisions.

Please note the limitations of the scope of our order. The order does not in any way ban Meta from offering the Services in Norway, nor does it preclude Meta from processing personal data for advertisement purposes in general. Only Behavioural Advertising as defined above is affected to the extent that it is based on 6(1)(b) or 6(1)(f) GDPR.

Practices such as targeting ads based on information that data subjects have provided in the “About” section of their user profile, or generalised advertising, is out of scope of this order. For example, the order does not itself prevent an advertising campaign on Facebook which, based on profile bio information, targets ads towards females between 30 and 40 years of age residing in Oslo and who have studied engineering.’

Practical impacts on your advertising

- Review where you profile online and offline users and where you combine data from different sources, even your own sources.

- Do or review your DPIA on that processing, including reasonable expectations of users.

- Review the legal basis you’re relying on, particularly in light of the DPC and CJEU decisions against Meta, and Norway’s ban.

- If you’re doing behavioural advertising, consent is likely the only way to go.

- For other purposes, check your context, legal basis and transparency to individuals concerned.

Rights in Keepabl

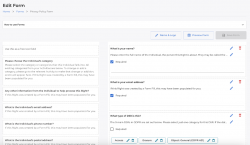

We’re excited to announce that Rights have come to Keepabl! We’ve always supported you with reports on where you process personal data about which data subjects, but we now have a full-blown Rights module integrated within Keepabl.

We’re calling it Rights not Data Subject Rights because it’s a global module, not restricted to GDPR. And we’ve moved to calling people Individuals instead of Data Subjects to reduce jargon.

Create as many Forms as you want to intake Rights into Keepabl, such as tailored Forms for your Privacy Policy and your Customer Support team, all feeding into the Rights Records in Keepabl. We were going to call these ‘Petals’ as they’re like the petals of a flower, all feeding back into the same stem, but Marketing said no. They’re Forms (very fragrant forms).

And of course, you can always submit Rights directly into Keepabl, and control who sees what with tailored My Rights dashboards for your Users and a Rights Log for Admins.

And once a Right is entered into Keepabl, you and your team get instant email alerts, and our solution leads you through the process of managing each Right.

Come see for yourself, book your demo now!

AI & the USA

White House & Voluntary AI Commitment

On 21 July 2023, the White House announced that President Biden had convened ‘seven leading AI companies at the White House today – Amazon, Anthropic, Google, Inflection, Meta, Microsoft, and OpenAI – to announce that the Biden-Harris Administration has secured voluntary commitments from these companies to help move toward safe, secure, and transparent development of AI technology.’

You’ll recall that the EU is moving forward to regulate AI, whereas the UK and US are taking a more laissez-faire approach so as not to slow down advances and to remain competitive locations for AI development.

The three principles are:

- Ensuring Products are Safe Before Introducing Them to the Public

- Building Systems that Put Security First

- Earning the Public’s Trust

These limited principles seem blindingly obvious. They also don’t include being a good actor when training and creating AI, such as not infringing Privacy laws when scraping data to train models, or copyright and other IP rights in the process.

US Blueprint for an AI Bill of Rights

The voluntary AI Commitment came after the White House released the Blueprint for an AI Bill of Rights, a much fuller document built around five principles:

- Safe and Effective Systems

- Algorithmic Discrimination Protections

- Data Privacy

- Notice & Explanation

- Human Alternatives, Consideration, and Fallback

There are a few IP and competition investigations into OpenAI, but you can see the overlap between these principles and both the Privacy principles and the automated processing rules in GDPR, which is why Privacy is at the forefront of enforcement and investigation on AI.

The AI Bill of Rights sits alongside NIST’s AI Risk Management Framework and other US guidance.

AI enforcement

All of this does demonstrate how AI is being treated differently by the US (and the UK is following its lead), even though current laws already apply.

As the IAPP reports, this was reiterated back in April at the IAPP Global Privacy Summit 2023 by US FTC Commissioner Alvaro Bedoya, who noted:

“First, generative AI is regulated. Second, much of that law is focused on impacts to regular people. Not experts, regular people. Third, some of that law demands explanations. ‘Unpredictability’ is rarely a defense. And fourth, looking ahead, regulators and society at large will need companies to do much more to be transparent and accountable.”

Practical impact

- There’s not much change in the law, and lots of existing laws already apply. So check your DPIAs when using AI, in particular how you obtain and use training data.

- Have a look at the social media reaction to Zoom’s clumsy change to its terms of service on training AI on Customer Data, and its subsequent rowback, to see how not to handle clarity and legal drafting in your own terms of service.

- If you’re in the USA, check if the various biometric laws (Illinois, Texas etc) or specific AI laws (NYC on recruitment use etc) apply to you.

- If you’re in the EEA or UK, or your AI is used there, keep an eye on the EU AI Act, which is still draft but now in the trilogue process.

Don’t forget to sign up!

Subscribe to our newsletter to get these practical insights into your inbox each month (ok, so we missed July…)
