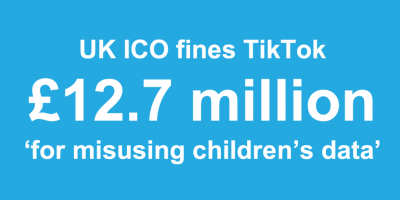

UK ICO fines TikTok £12.7 million for GDPR breaches on children's data

"TikTok should have known better. TikTok should have done better." John Edwards, UK Information Commissioner. Read our Founder's insights, first published in Thomson Reuters Regulatory Intelligence

Children’s data increasingly in focus: UK ICO fines TikTok £12.7 million for GDPR breaches

This article was first published in Thomson Reuters Regulatory Intelligence on 12 April 2023.


TikTok, which had already been banned from government devices in the United States and the UK, by the European Commission and the EU Council, and in other countries, was fined £12.7 million by the UK Information Commissioner’s Office (ICO) on April 4, 2023, for misusing children’s data in breach of its obligations under the General Data Protection Regulation (GDPR).

In this article the author reviews the history of the case, highlights other international developments, and sets out action points for firms that provide online services likely to be accessed by children and that process their personal data.

TikTok timeline: February 2019

In February 2019, the U.S. Federal Trade Commission (FTC) obtained the then-largest monetary settlement in a case under the U.S. Children’s Online Privacy Protection Act (COPPA). TikTok agreed to pay $5.7 million to settle claims that it had breached COPPA and “illegally collected personal information from children”.

“The operators of Musical.ly — now known as TikTok — knew many children were using the app but they still failed to seek parental consent before collecting names, email addresses, and other personal information from users under the age of 13 … This record penalty should be a reminder to all online services and websites that target children: we take enforcement of COPPA very seriously, and we will not tolerate companies that flagrantly ignore the law,” Joseph Simons, then chair of the FTC, said at the time.

In that same announcement, the FTC noted that there had been public reports of adults trying to contact children via the app, and stated that: “The operators of the [TikTok] app were aware that a significant percentage of users were younger than 13 and received thousands of complaints from parents that their children under 13 had created [TikTok] accounts, according to the FTC’s complaint.”

As well as the payment, TikTok agreed to comply with COPPA in future and to take offline all videos made by children who were under the age of 13.

Back to Europe: parental consent under GDPR

Article 8 of the EU GDPR provides that, where processing is based on consent and information society services (such as the TikTok app or online financial services) are offered directly to a child below the age of 16, processing that child’s personal data is lawful only if and to the extent that consent is given or authorised by the holder of parental responsibility over the child.

A member state may lower that age by national law, but not below 13. The UK took advantage of that provision and set the age at 13 under UK law (the same age as under COPPA in the United States).
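For firms mapping this rule across jurisdictions, the threshold logic can be sketched in a few lines. This is a minimal Python sketch, assuming the only inputs are the user's age and the consent age that applies in a given jurisdiction; the names `parental_consent_required`, `consent_required_in` and `CONSENT_AGE_BY_JURISDICTION` are hypothetical, the table contains only the two ages stated above (the GDPR default of 16 and the UK's 13), and the other Article 8 conditions (consent as the lawful basis, a service offered directly to a child) are assumed to have already been met.

```python
# Hypothetical sketch of the Article 8 GDPR age threshold.
# Assumes consent is the lawful basis and the service is offered
# directly to children; those conditions are not checked here.

GDPR_DEFAULT_CONSENT_AGE = 16  # Article 8 default age
GDPR_MINIMUM_CONSENT_AGE = 13  # lowest age a member state may set

# Illustrative table: only the ages stated in the article are included.
# A firm would need to add every jurisdiction in which it operates.
CONSENT_AGE_BY_JURISDICTION = {
    "EU default": GDPR_DEFAULT_CONSENT_AGE,
    "UK": 13,
}


def parental_consent_required(user_age: int, consent_age: int) -> bool:
    """Return True if a user of this age needs parental consent or authorisation."""
    # A national consent age outside the 13-16 range is not permitted by Article 8.
    if not GDPR_MINIMUM_CONSENT_AGE <= consent_age <= GDPR_DEFAULT_CONSENT_AGE:
        raise ValueError("consent age must be between 13 and 16 under Article 8")
    # Children below the threshold need consent from a parental responsibility holder.
    return user_age < consent_age


def consent_required_in(jurisdiction: str, user_age: int) -> bool:
    """Look up the jurisdiction's consent age (hypothetical table) and apply the rule."""
    return parental_consent_required(user_age, CONSENT_AGE_BY_JURISDICTION[jurisdiction])
```

For example, `consent_required_in("UK", 12)` is `True`, while `consent_required_in("UK", 14)` is `False`; the same 14-year-old would need parental consent under the GDPR default of 16. Keeping the ages in one table mirrors the action point below: the rule is identical everywhere, and only the threshold changes by jurisdiction.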

UK ICO’s Children’s code

The UK ICO is a recognised leader in protecting children’s rights and freedoms when their personal data is processed. This reflects the concerns of the UK public: the UK ICO’s own “national survey into people’s biggest data protection concerns ranked children’s privacy second only to cyber security”.

The UK ICO’s Children’s code (formally, the Age Appropriate Design Code) took effect on September 2, 2020, after the period to which the TikTok fine reportedly relates. It sets out 15 standards aimed at online services, such as apps, gaming platforms and web and social media sites, that are likely to be accessed by children.

The code is not itself law, but it is a statutory code of practice required under UK data protection law, so firms that do not follow it may find it difficult to demonstrate compliance.

TikTok timeline: September 2022

More than three years after TikTok’s FTC settlement, on September 26, 2022, the ICO published a notice of intent to fine TikTok £27 million, stating that TikTok “may have breached UK data protection law, failing to protect children’s privacy when using the TikTok platform”.

The breaches reportedly related to the period from May 2018 to July 2020, continuing for 17 months after the FTC settlement.

A notice of intent is a provisional view only, but the UK ICO did state that the notice came at the end of an investigation that “found the company may have:

- processed the data of children under the age of 13 without appropriate parental consent;

- failed to provide proper information to its users in a concise, transparent, and easily understood way; and

- processed special category data, without legal grounds to do so.”

The Information Commissioner himself pushed the point home: “Companies providing digital services have a legal duty to put those protections in place, but our provisional view is that TikTok fell short of meeting that requirement.”

TikTok timeline: April 2023

On April 4, 2023, the ICO announced it had fined TikTok £12.7 million, stating that there were “a number of breaches of data protection law, including failing to use children’s personal data lawfully”. The ICO estimates that up to 1.4 million UK children under 13 were using TikTok in 2020, which, based on UK government statistics, amounts to considerably more than 10% of UK under-13s.

The ICO states that TikTok ought to have been aware that under-13s were using its platform, which is perhaps unsurprising given the FTC’s findings in 2019. The ICO found not only that this use was contrary to TikTok’s own terms of service, but also that:

- “Personal data belonging to children under 13 was used without parental consent”

- “TikTok ‘did not do enough’ to check who was using their platform and take sufficient action to remove the underage children.”

“Our findings were that TikTok was not doing enough to prevent under-13s accessing their platform, they were not doing enough when they became aware of under-13s to get rid of them, and they were not doing enough to detect under-13s on there,” Edwards told the Guardian newspaper.

Wider than TikTok

Also on April 4, 2023, Italy’s data protection authority ordered a temporary halt to ChatGPT’s processing of the personal data of data subjects in Italy. The authority will now investigate further.

The Italian supervisory authority “emphasises in its order that the lack of whatever age verification mechanism exposes children to receiving responses that are absolutely inappropriate to their age and awareness, even though the service is allegedly addressed to users aged above 13 according to OpenAI’s terms of service”.

Children’s rights are also being protected outside Europe. The California Age-Appropriate Design Code Act was signed into law on September 15, 2022, to take effect in 2024, and draws heavily on the UK ICO’s Children’s code.

3 action points

There are three main action points for firms.

1. Review online services and solutions to establish whether they are likely to be accessed by children (under 18) and, if so, of what age.

“This will depend upon whether the content and design of your service is likely to appeal to children and any measures you may have in place to restrict or discourage their access to your service,” the UK ICO has said.

2. Document the review and decision.

3. If it is likely that children will access the firm’s online services or solutions:

- depending on where the firm operates, review the UK ICO’s Children’s code, the California code, and other applicable laws and guidance;

- review the relevant age for parental consent in all jurisdictions in which the firm operates and ensure the firm’s procedures for obtaining and proving receipt of such consent are fit-for-purpose in each;

- perform a data protection impact assessment (DPIA) to assess and address risks, consulting the firm’s data protection officer (the UK ICO has guidance on DPIAs within the Children’s code and standalone DPIA guidance);

- and remediate any gaps to strengthen the firm’s privacy compliance.

Make Privacy Compliance Intuitive & Simple

If you’ve enjoyed these practical insights into this complex topic, why not see how Keepabl’s multi-award-winning Privacy Management Software can give you instant insights into your Privacy Compliance status, helping you create and maintain your GDPR governance framework and instantly highlighting gaps and creating needed reports.
